-

-

Personalised Podcasts

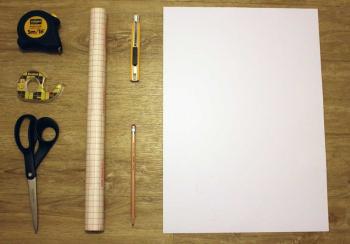

I've been working on a fun little side project for a while – The Morning Briefing: a personalised podcast.

I listen to a lot of tech and business news podcasts but something like Morning Brew Daily works on the US news cycle. I wanted a morning show that was ready for whenever o'clock in the morning you get up in your country.

After learning about NotebookLM, I set about wrangling a herd of LLMs, TTSs and RSS and came up with a proof-of-concept "Podcast on Demand". I can pick the virtual presenters, they discuss the latest stories from whatever topics I've subscribed to, add in a bed of music and I've got a customised podcast ready for me when I wake up.

And it all runs locally without using OpenAI or Google!

Here's a podcast from a couple of months ago:

-

Teaching a New Dog Old Tricks

What do Prince of Persia, Apollo 11, and Spacewar! have in common?

They were built by geniuses, powered by sheer grit, and written in programming languages that have mostly been buried with floppy disks and punch cards. They’re ancient, brittle, and borderline unreadable by modern standards—and yet, they’re masterclasses in creative problem-solving. The kind of code that teaches you how to think, not just how to import a library.

One of the reasons I enjoy building Komment is that I get to use it to read these projects without having to dust off old Assembly references – I don’t have to be fluent in Pascal, 6502 Assembly, or ye olde pre-ANSI C to learn from them.

⸻

🕰 Why Old Code Still Has Something to Teach Us

We tend to think the past has nothing for us—especially in tech. Everything moves so fast. New frameworks, new languages, new “best practices” every 18 months.

But when you look at the code behind something like the original Prince of Persia, you see a developer solving incredibly hard problems with almost no resources. No GPU. No high-level abstractions. Just a few kilobytes and some really clever hacks.

Studying that codebase is like looking at a stunning cathedral. Then getting closer and seeing it's built with lego. Then getting closer and seeing that the lego bricks were hand carved.

The codebase has some clever things to learn from but it’s like trying to learn German by sitting down with a copy of Goethe printed in Fraktur. It’s possible. But it’s going to take a long time to figure out what's actually going on.

The Problem with Legendary Codebases

Here’s the experience of trying to learn from one of these classic codebases:

- You download the repo. It’s full of files with extensions you’ve never seen.

- The comments are in all caps. Some are in French. Some lie.

- There’s no structure. No docs. Just a cryptic main loop and prayer.

You want to understand the sprite engine. Instead, you’re 400 lines deep in something called MOVE.S that appears to control both jumping and wall collisions and maybe the music system? It’s chaos. Beautiful, historical chaos.

Enter Komment: The Code Archaeologist’s Toolkit

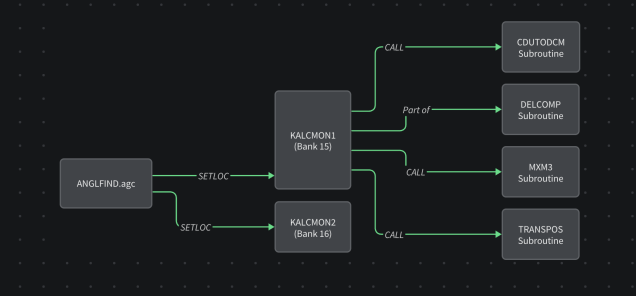

One of my favourite things to do with Komment is point it at one of these projects and have the system parse the codebase and turn it into something much easier to grok.

And it comes with diagrams

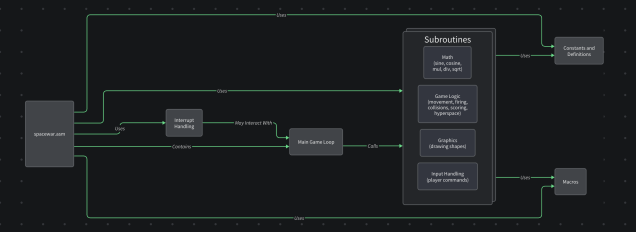

🕹 Case Study: Following the Flow of Spacewar!

Take Spacewar!—one of the earliest digital games, built in 1962. It was designed on a PDP-1, a machine that predated basically everything except carbon.

I threw it into one of our pipelines and got out a breakdown of how it does what it does.

You’re no longer staring into the abyss. You’re walking through the code like a museum exhibit, with a helpful guide whispering in your ear.

Check out the Spacewar! wiki on Komment

-

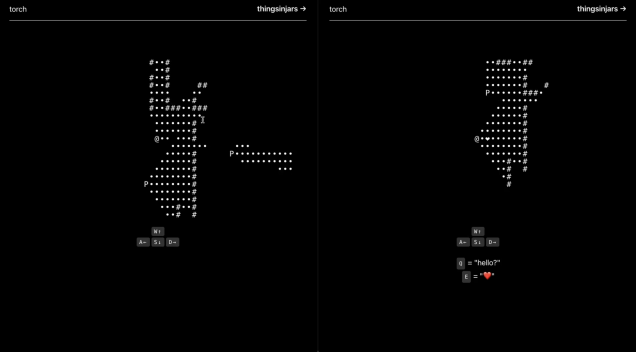

Torch? Again?

It must be re-run season.

After recently rebuilding Torch in ASCII using Phoenix and Elixir, I was inspired to do it yet again but with the original ray-casting-on-canvas look.

This is a super-simple express server with websockets and in-memory state. The more complicated multi-player raycasting makes a return this time though (no list comprehension shortcuts when we're doing it this way, unfortunately.

I still think there's some fun to be had in this game and maybe in another 15 years or so, I'll rebuild it again.

Github Repo: https://github.com/thingsinjars/torch-js

-

Torch in Phoenix

In an old, old callback, I decided to revisit a game design from… almost 14 years ago?!

I was looking for an excuse to play with Elixir and Phoenix and decided the best thing to do would be grabbing an old idea and throwing some new tech at it.

And, to mix things up, why not restyle it to look like a very old idea?

Using ASCII for this meant that I could actually do a lot of the visibility and ray casting using simple list comprehension (i.e. intersect all other player's 'lighted' cells with the current player's 360 ray cast visibility)

Github Repo: https://github.com/thingsinjars/torch

-

Monster Coffee Break

I wrote an RPG Adventure Guide.

I've been having fun recently with Lasers & Feelings and initially just wanted to make a quick little hack based in a fun and colourful world. Ideas turned into notes turned into scenarios turned into enhancements...

Long story short, I ended up with a 30 page adventure guide, two conflict mechanics, a bunch of set pieces and inspiration and a load of colourful characters.

Monster Coffee Break combines RPG, fantasy and comedy horror tropes with the heady and intoxicating world of corporate bureaucracy.

MonsterCorp is a sprawling corporate office catering to all kinds of monsters, from goo blobs to banshees to vampire bats. Employees run everything from nightmarish supply chains to scream-powered HR seminars.

The office is absurdly bureaucratic. Monsters need forms, signatures, and permits for everything.

This is definitely not a full RPG system. It just grew out of my own notes while playing with Lasers & Feelings. It's meant to inspire your own game, provide some interesting ideas and generally be a bit daft.

Some things might not make sense, some might not work. That's fine, just roll with it.

Aside: I then used the opportunity to create a simple little one-page website for it and throw in some fun CSS to do with scroll snapping.

-

Snex – Multiplayer Snake

It's been a few years since I built anything in Elixir so I decided this weekend to refresh my memory.

Rather than build yet another location-based API, I decided to try a game.

Combining super-simple gameplay and Phoenix Channels, I eventually ended up with Snex - Multiplayer Snake.

On page load, you are assigned a random session ID so you can copy-paste the URL and share it with any one to play on the same board.

I've currently got it deployed on Gigalixir.

The performance on the deployed version isn't great due to network latency. Essentially, the game ticks every 100ms and sends an update to each player so if you're moving left then press down, typically the server will process one more

leftevent before yourdownarrives. There are plenty of blog posts about handling latency, not just in multiplayer online games but also specific discussions on multiplayer online snake. I decided I could either dig into refreshing my knowledge of that or stick with refreshing my knowledge of Elixir and Phoenix. I went with the latter. -

Operations: A Maths Game

Operations

1+ players

The aim is to get the highest number possible after using each of your tokens.

There is 1 die

Each player has 4 tokens with different symbols on:

+−×÷Each player rolls the die and the number they get is their starting number.

Lowest score starts. If there's a draw, youngest of those starts.

Each round:

- Roll the die

- Choose one of your operations.

- Perform your operation with the new number and your existing number. Try to get the highest score

- Discard your operation token. You only get to use each operation once.

Note: When the calculation is a division with a remainder, you can either discard the remainder or continue with decimals, depending on who is playing.

Example game:

2 players.

A rolls 2, B rolls 3. A has the lowest starting number so they start

- Round 1

- A rolls a 4. They decide to use their

+operation. They now have 6. - B rolls a 1. They use their

÷. They still have 3.

- A rolls a 4. They decide to use their

- Round 2

- A rolls 6. They use their

×. They have 36. - B rolls 5. They use their

×. B now has 15

- A rolls 6. They use their

- Round 3

- A rolls another 6. They've already used their

×so they have to either subtract 6 or divide by 6. They use−. They have 30 - B rolls 2. They

+it. B has 17

- A rolls another 6. They've already used their

- Round 4

- A rolls another 6! Now they only have their

÷left. They have to divide 30 by 6. Now they have 5. - B rolls 3. They have their

−left. B has 14.

- A rolls another 6! Now they only have their

B wins.

Variations

- For advanced maths, add in the power and root symbols

^√ - Try to get the lowest score instead of the highest.

- Try to get the lowest score without going below zero.

-

Pi-ku

A what? A pi-ku?

To quote Maths Week Scotland:

A pi-ku is a poem that follows the form of a haiku, but instead of the 5-7-5 haiku pattern, the syllables in a pi-ku follow the number of digits in the mathematical constant pi (π).

So, instead of 5-7-5, a pi-ku would follow the pattern 3-1-4 (-1-5-9-2…etc.)

Of course, I couldn't avoid having a go myself, could I?

Approximation of Pi

Pi is three

Well...

Three and a bit.

…ish.

The 'bit' is quite small.

Full-time Score of the Final of the World Circle Geometry Ratio Tournament 2023

Radius:

2

Diameter:

1

Subjectively Reviewing areas of Mathematics

Algebra?

Fine.

Geometry?

Fun.

Trigonometry?

Partial Differential Equations?

Both Good.

Fractal Geometry?

Looks simple at first.

Gets... tricky

When you look closer.

Marginal

Fermat's Last?

Gasp!

I Found a Proof!

Which,

Unfortunately...

This poem is too short to contain. -

RFID Timesheet

I've done a lot of projects over the holidays. This is a quick collection of notes to remind myself later.

I used an RC522 RFID scanner (originally part of a Tonuino project) and wired it to a Particle Photon. Whenever an RFID tag was held to it, it would publish an event containing the ID of the card to the Particle Cloud. When the card was removed, it would publish a blank event. This is the code from the Photon:

// Photon RFID-RC522 // A2 SDA // A3 SCK // A4 MISO // A5 MOSI // D2 RST // GND GND // 3V3 3.3V #include

#include #define LED_PIN D7 constexpr uint8_t RST_PIN = D2; // Configurable, see typical pin layout above constexpr uint8_t SS_PIN = A2; // Configurable, see typical pin layout above MFRC522 mfrc522(SS_PIN, RST_PIN); // Create MFRC522 instance uint32_t lastMillis = 0; bool wasPresent = false; void setup() { pinMode(LED_PIN, OUTPUT); Serial.begin(9600); // Initialize serial communications with the PC while (!Serial); // Do nothing if no serial port is opened (added for Arduinos based on ATMEGA32U4) SPI.begin(); // Init SPI bus mfrc522.PCD_Init(); // Init MFRC522 mfrc522.PCD_DumpVersionToSerial(); // Show details of PCD - MFRC522 Card Reader details Serial.println(F("Scan PICC to see UID, SAK, type, and data blocks...")); } void loop() { // Look for new cards if ( ! mfrc522.PICC_IsNewCardPresent()) { if(wasPresent) { if(! mfrc522.PICC_IsNewCardPresent()) { Serial.println("No card"); Particle.publish("rfid_scan", "", 60, PRIVATE); wasPresent = false; } } else { } return; } // Select one of the cards if ( ! mfrc522.PICC_ReadCardSerial()) { return; } char cardID[32] = ""; for (byte i = 0; i < mfrc522.uid.size; i++) { char hex[4]; snprintf(hex, sizeof(hex), "%02x", mfrc522.uid.uidByte[i]); strncat(cardID, hex, sizeof(cardID)); } if (millis() - lastMillis < 1000) { return; } lastMillis = millis(); if(!wasPresent) { wasPresent = true; Particle.publish("rfid_scan", cardID, 60, PRIVATE); Serial.printlnf("Card: %s", cardID); // Turn on the LED digitalWrite(LED_PIN, HIGH); // Leave it on for one second delay(1s); // Turn it off digitalWrite(LED_PIN, LOW); // Wait one more second delay(1s); } // Dump debug info about the card; PICC_HaltA() is automatically called //mfrc522.PICC_DumpToSerial(&(mfrc522.uid)); } I then used IFTTT to read these events and write them to a Google Spreadsheet. This is the IFTTT Excel code:

{{CreatedAt}} |||{{EventContents}}||| =IF(ISODD(ROW()), "Started", "Stopped") ||| =IF(ISEVEN(ROW()),ROUND(((DATEVALUE(REGEXEXTRACT(INDIRECT(ADDRESS(ROW(),COLUMN()-3,4)), "w+ d{2}, d{4}")) + TIMEVALUE(REGEXEXTRACT(INDIRECT(ADDRESS(ROW(),COLUMN()-3,4)), "d{2}:d{2}[A|P]M$"))) - ( DATEVALUE(REGEXEXTRACT(INDIRECT(ADDRESS(ROW()-1,COLUMN()-3,4)), "w+ d{2}, d{4}")) + TIMEVALUE(REGEXEXTRACT(INDIRECT(ADDRESS(ROW()-1,COLUMN()-3,4)), "d{2}:d{2}[A|P]M$")))) * 24, 2),"")||| =IFERROR(VLOOKUP(INDIRECT(ADDRESS(ROW(), COLUMN()-3),4), I$3:J$10, 2, FALSE), "")We now have a physical time tracker that can be used to log time spent on individual projects. Super-handy for Jenni's freelance work.

-

IoT Button

I've done a lot of projects over the holidays. This is a quick collection of notes to remind myself later.

I took the insides out of an old IKEA Spøka nightlight and squeezed in a Particle Photon, a battery shield and a battery then soldered the nightlight's on/off switch onto some jumper cables and wired that in. I now have an internet-connected button that looks cute.

Still no idea what to do with it but it’s fun.

Here's the code that's running on the Photon:

int led = D7; // Built-in LED int pushButton = D6; // Old Spøka momentary switch

bool wasUp = true;

void setup() { pinMode(led, OUTPUT); pinMode(pushButton, INPUT_PULLUP); }

void loop() { int pushButtonState;

pushButtonState = digitalRead(pushButton);

if(pushButtonState == LOW) { // If we push down on the push button digitalWrite(led, HIGH); // Turn ON the LED if(wasUp) { Particle.publish("Spooky pressed"); wasUp = false; } } else { digitalWrite(led, LOW); // Turn OFF the LED wasUp = true; }

}

When you press the button, you get a message published to the Particle Cloud.

-

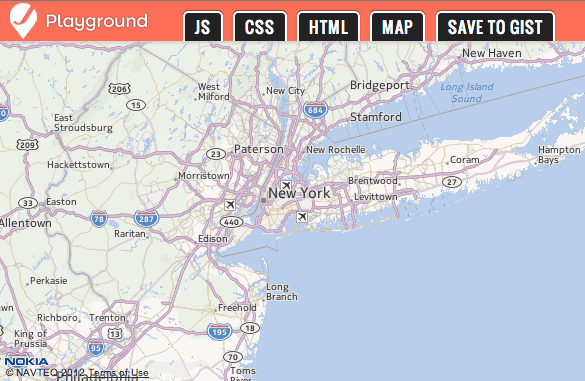

HERE Maps Web Component

At the weekend, I found myself starting another little side project that needed a map. And, predictably, I chose to use a HERE map.

In my day job, I use a lot of Vue but I do tend to prefer web components where possible, wrapping them in Vue only where necessary. This, then, is the natural outcome:

Now I can embed HERE maps with a single web component.

<here-map api-key="1234-54321" latitude="52.5" longitude="13.4" zoom="12" ></here-map>Or include markers directly:

<here-map api-key="1234-54321" latitude="52.5" longitude="13.4" zoom="12"> <here-map-marker latitude="52.5" longitude="13.4" /> <here-map-marker latitude="52.501" longitude="13.405" icon="https://cdn3.iconfinder.com/data/icons/tourism/eiffel200.png" /> </here-map> -

Tape EP

I've been on a bit of a 70s/80s funk/soul organ thing recently. Think 'Vulfpeck' plays the 'Theme from Hill Street Blues'…

-

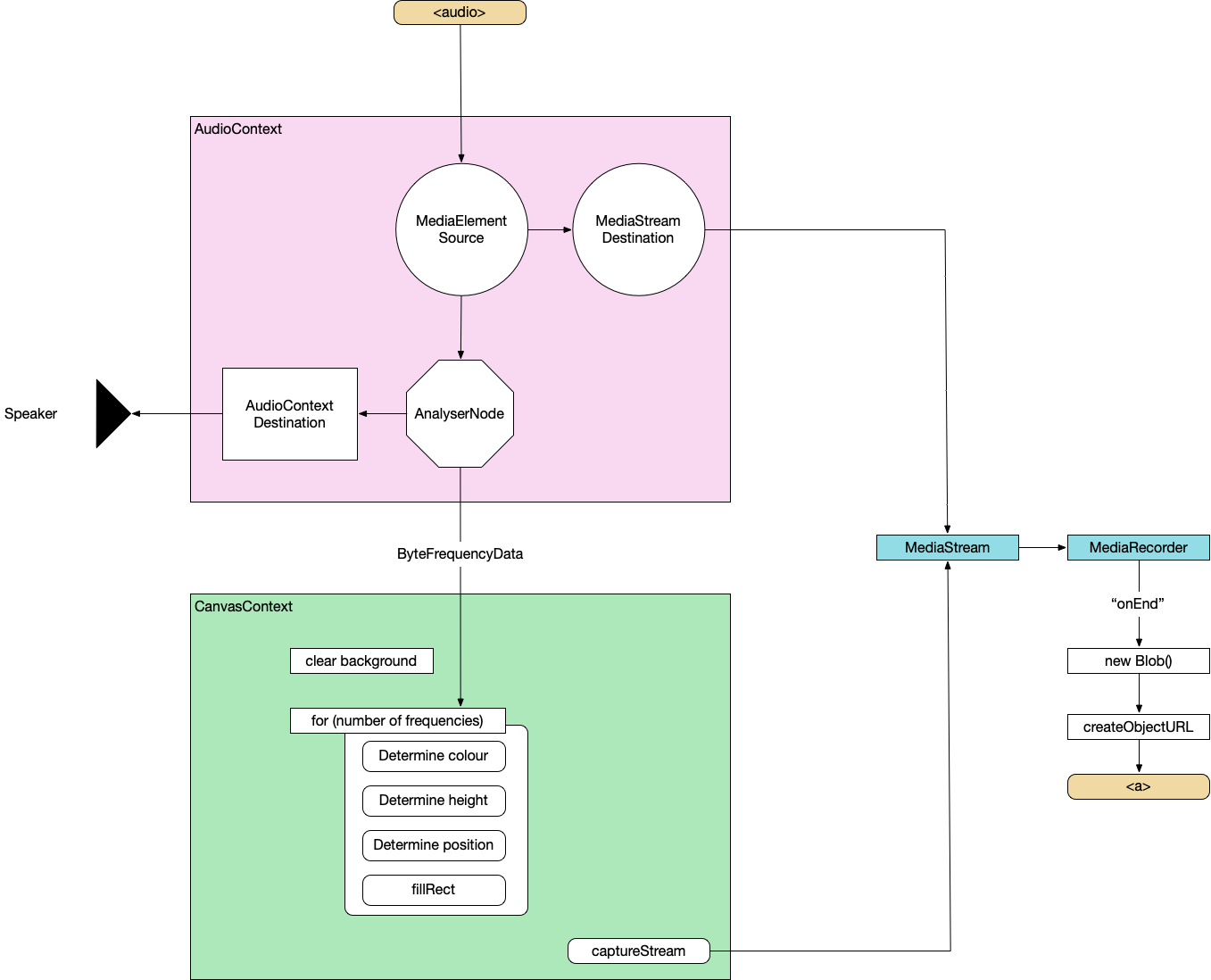

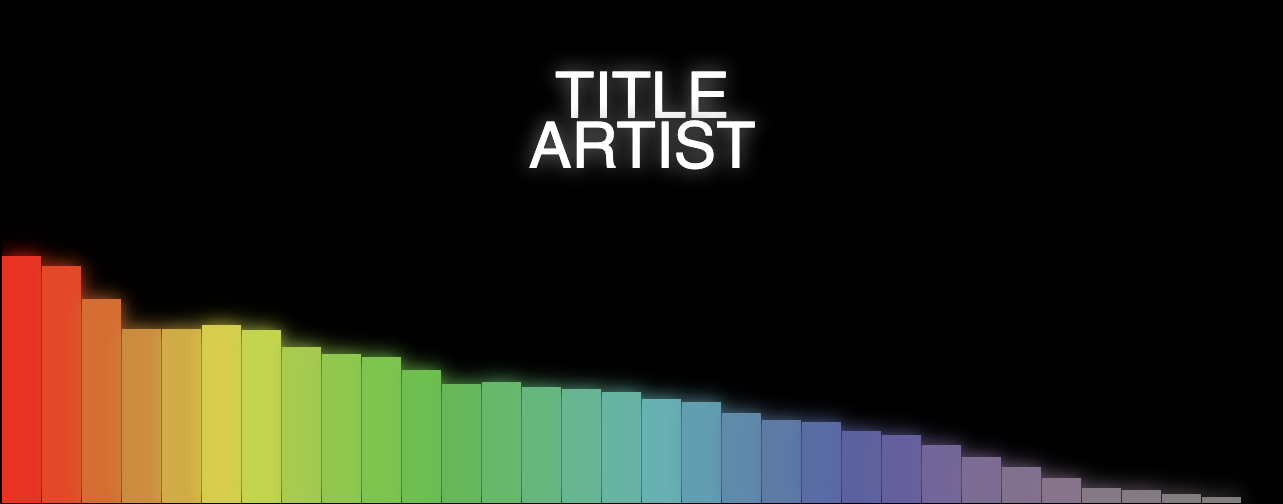

Using Web APIs to generate music videos

Plan

A very simple web app that generates a music visualisation from an audio file and renders it to a downloadable movie file. Most importantly, it does this all on the client side.

To do this, we'll combine the Web Audio API, Canvas API and MediaStream Recording API. I'll not be providing the complete source code as we go through it but just enough to point you in the right direction.

For more detail, I recommend the following:

I've used this as the basis to create all the music videos for my next album. You can see examples in my previous post.

TL;DR

Here's the complete architecture of what we're building:

And here's the finished build: All-In-One Music Visualiser

Basic HTML

First, let's just set up some basic HTML to show the visualisation, hold the audio and take in some parameters.

<canvas></canvas> <audio controls></audio> <label>Track title: <input type="text" id="track" placeholder="Track title"> </label> <label>Artist name: <input type="text" id="artist" placeholder="Artist name"> </label> <label>Audio File: <input type="file" id="file" accept="audio/*" /> </label>Read in audio file

const audio = document.querySelector('audio'); audio.src = URL.createObjectURL(document.querySelector('[type=file]').files[0]); audio.load();Web Audio API

Wire up Web Audio

Create an AudioContext, source the audio from the

<audio>element, prepare a streaming destination for later and connect theinto theout.const context = new AudioContext(); const src = context.createMediaElementSource(audio); const dest = context.createMediaStreamDestination(); src.connect(dest);Attach an analyser node

We want our visualisation to react to the music so we need to wire in an AnalyserNode. Thankfully, this handles all the complicated audio processing so we don't need to worry about it too much. We also attach the analyser to the destination node of the AudioContext so that we can hear it through the computer speakers. Not strictly necessary but it's nice to be able to hear what we're doing.

const analyser = context.createAnalyser(); src.connect(analyser); analyser.connect(context.destination);Prepare to sample frequency

the

fftSizeis essentially "How detailed do we want the analysis to be?". It's more complicated than that but this is all the detail we need for now. Here, we're using 64 which is very low but 512, 1024 and 2048 are all good. It depends on the actual visualisations you want to produce at the other end.The

smoothingTimeConstantis approximately "How much do we want each sample frame to be like the previous one?". Too low and the visualisation is very jerky, too high and it barely changes.analyser.fftSize = 64; analyser.smoothingTimeConstant = 0.8; const bufferLength = analyser.frequencyBinCount; // Prepare the array to hold the analysed frequencies const frequency = new Uint8Array(bufferLength);Finally grab the values from the

<input>elements.const titleText = document.querySelector("#track").value.toUpperCase(); const artistText = document.querySelector("#artist").value.toUpperCase();Canvas API

Now we do the standard canvas setup – grab a reference to the canvas, set our render size (doesn't need to be the same as the visible size of the canvas) and prepare a

2dcontext.Prepare Canvas

const canvas = document.getElementById("canvas"); canvas.width = 1280; canvas.height = 720; const ctx = canvas.getContext("2d");Render loop

Execute this on every render frame.

function renderFrame() { // schedule the next render requestAnimationFrame(renderFrame); // Grab the frequency analysis of the current frame analyser.getByteFrequencyData(frequency); // Draw the various elements of the visualisation // This bit is easy to modify into a plugin structure. drawBackground(ctx); drawBars(ctx, frequency); drawText(ctx, titleText, artistText); }The various visualisation elements:

function drawBackground(ctx) { ctx.fillStyle = 'white'; ctx.fillRect(0, 0, canvas.width, canvas.height); }function drawBars(ctx, frequency) { const widthOfEachBar = (canvas.width / frequency.length); // Loop over data array for (let i = 0; i < frequency.length; i++) { const heightOfThisBar = frequency[i]; // Base the colour of the bar on its index const h = 360 * index; // Base the saturation on its height const s = 100 * (heightOfThisBar/256); const l = 50; const color = `hsl(${h}, ${s}%, ${l}%)`; ctx.fillStyle = color; // Add a little shadow/glow around each bar ctx.shadowBlur = 20; ctx.shadowColor = color; // Draw the individual bar ctx.fillRect(x, canvas.height - heightOfThisBar, widthOfEachBar - 1, heightOfThisBar); x += (widthOfEachBar); } }function drawText(ctx, titleText, artistText) { ctx.fillStyle = 'white'; ctx.textAlign = 'center'; ctx.font = '4em sans-serif'; ctx.fillText(titleText, canvas.width/2, canvas.height/2 - 25); ctx.fillText(artistText, canvas.width/2, canvas.height/2 + 25); }By this point, we can load a supported audio file and see some pretty pictures reacting to the music.

MediaRecorder

This section is copied almost word-for-word from StackOverflow

First, we'll create a combined

MediaStreamobject from the audio data and the canvas data.const chunks = []; // here we will store our recorded media chunks (Blobs) const stream = canvas.captureStream(); // grab our canvas MediaStream let combined = new MediaStream([ ...stream.getTracks(), ...dest.stream.getTracks(), ]);Next, we start recording that data chunk-by-chunk. We'll save it as webm.

const rec = new MediaRecorder(combined, { audioBitsPerSecond : 128000, videoBitsPerSecond : 2500000, mimeType : 'video/webm' });When the recorder receives a chunk of data, store it in memory.

rec.ondataavailable = (e) => chunks.push(e.data);When we finish recording, combine the chunks and send them to an export method

rec.onstop = (e) => exportVid(new Blob(chunks, { type: "video/webm" })); rec.start();This is sometime necessary to avoid async timing issues when loading the audio data. It doesn't hurt, at least.

audio.addEventListener("durationchange", () => { setTimeout(() => rec.stop(), Math.floor(audio.duration * 1000)); }The final video export. We convert the combined chunks (the Blob) into an object URL and create an anchor that lets us download it from the browser.

function exportVid(blob) { const a = document.createElement("a"); a.download = "myvid.webm"; a.href = URL.createObjectURL(blob); a.textContent = "download"; document.body.appendChild(a); }This export call will be triggered after the audio has finished. You load an audio file, watch the visualisation play through, when it's finished, you click the download link and you get a webm.

So now, we have the basis for a complete music video generator – audio in, video file out.

Try the basic version out – All-In-One Music Visualiser.

Or watch a video generated using this basic version (as a bonus, this music was also automatically generated in the browser but that's a topic for another day):

Don't forget, you can also find me on Spotify.

-

Music Visualisation

While working on my next album (Minimum Viable Product of a Misspent Youth), I decided to have a go at building a music visualiser.

No particular reason why, I've just never done it before. It involves attaching a Web Audio API Analyser Node to a Canvas then outputting the whole thing using a MediaRecorder.

The next post has details on how the basics of this approach works and how to build your own and here are a couple of examples of the visualisations.

btw, while you're waiting, why not check out my previous albums on Spotify?

-

It’s A Ghost's Life

Here's a fun, spooky little song for Hallowe’en.

Writing the lyrics took about 5 minutes. Writing the music took another 5. Recording and mastering it took about an hour.

Making the darned Pac-Man maze inside Minecraft took about 6 hours. No joke.

I think this means I'm better at recording music than I am at playing Minecraft and I'm okay with that.

-

Awsm Street

My lovely other half and I have launched an online shop selling unisex kids' clothes:

The idea came about after child #1 complained about the clothes in the shops getting duller and more grey the older he got. One Skateboarding Unicorn sketch later and Awsm Street was born.

You can now find Highland Cow Hoodies, Porridge t-shirts and Kid-friendly day planners.

-

Same place, different time

This is the same tune recorded in two completely different styles.

Piano & double-bass:

Lo-fi hip-hop:

-

Stand in the Sunset

I decided to re-record an old tune.

-

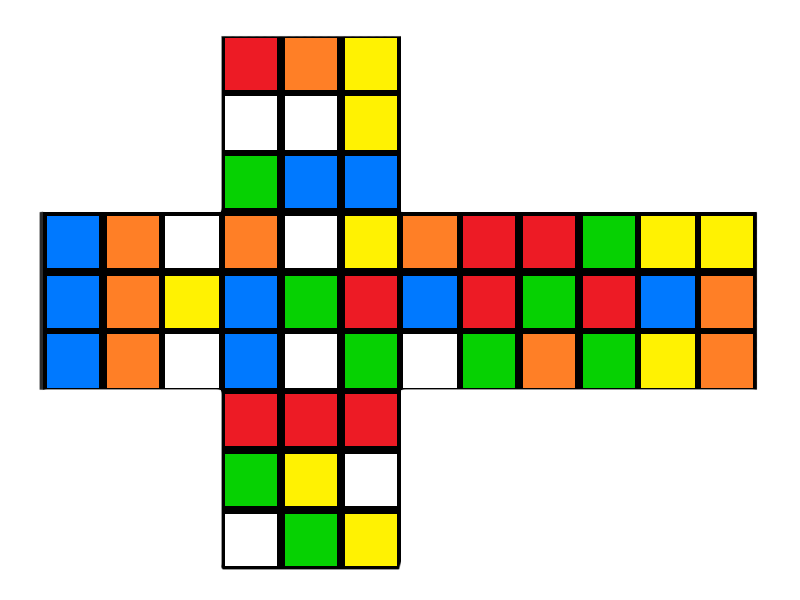

Line-by-line: Flat Cube Web Component

Line-by-Line breakdowns go into excessive – and sometimes unnecessary – detail about a specific, small project. Be prepared for minutiae.

Here, I'll go through the FlatCube web component line-by-line. It is a single web component that draws a flattened-out representation of a Rubik's Cube (or other 3x3 twisty puzzle) based on a string passed in that describes the positions of the pieces. A cube contains six faces. A face contains nine pieces.

You'll probably want to have the full code open in another window to see this in context.

The component

Usage

<flat-cube facelet="UUUUUUUUURRRRRRRRRFFFFFFFFFDDDDDDDDDLLLLLLLLLBBBBBBBBB" />Line-by-line

First, all WebComponents must extend the

HTMLElementclass. If you're building with a library such as LitElement, you might extend a different class but that class will ultimately extendHTMLElement.class FlatCube extends HTMLElement {The

constructoris called every time a FlatCube element is created, not just once per page load.constructor() {We have to call the constructor on HTMLElement so that all the behind-the-scenes plumbing is taken care of.

NOTE: If we don't do this, we can't use

this.super();Now set up some internal variables for the FlatCube itself.

this.facesbecomes an array representing the order of faces in the facelet string.this.faces = 'URFDLB'.split(''); this.facelet = false;We attach the shadow DOM to this element so that we can access it easily during the

renderphase.this._shadowRoot = this.attachShadow({ mode: 'open' });Then we create the template (see below) and trigger the first render to actually show the element on screen.

this.template = this.createTemplate(); this.render(); }Style

It isn't essential to separate the component's

CSSinto another method but I like to do it to keep everything nice and tidy.style() {By using template literals, we can write a block of plain CSS.

return `The

:hostCSS selector references the element itself. It's kinda likethisbut in CSS.NOTE: It can only be used from inside the Shadow DOM

:host {I want this component to be able to be used inline or as a block item so I'm specifying

inline-block. If the context it ends up being used in requires it to be block, it's possible to wrap it in another element.display: inline-block;Skinning and scaling

In this web component, one of the main goals of the implementation was the ability to easily change the size of the component and the colours of the faces.

Luckily, CSS variables make it super easy to make components skinnable and the calc function is very useful for scaling.

The base measurement

All the dimensions – the full component width, the faces, the individual pieces – are multiples of the

--flat-cube-face-widthvalue. This is passed in from the containing CSS by specifying a value for--flat-cube-facebut if it is not specified, we want a fallback of100px.--flat-cube-face-width: var(--flat-cube-face, 100px); }Now the styles for the complete element. Set position to be

relativeso that we can absolutely position the individual faces..flat-cube { position: relative;And specify the element to be the height of 3 faces and the width of 4. This is where the

calcfunction comes in handy, especially in a web component intended to be reusable and seamlessly scalable.height: calc(3 * var(--flat-cube-face-width)); width: calc(4 * var(--flat-cube-face-width));I'm using

outlinerather than border for the lines between the pieces so I want to add a 1px margin around the outside to prevent clipping.margin: 1px; }Each individual face shares the same class

.face {Use the value passed in as the base measurement.

height: var(--flat-cube-face-width); width: var(--flat-cube-face-width);Each face is absolutely positioned inside the containing

.flat-cubeelement.position: absolute;But rather than specify exact positions for each individual piece in a face, we use flexbox to lay them out automatically. We draw the pieces in order then let them wrap onto the next line,

display: flex; flex-wrap: wrap;I wanted to specify the width of each piece as a simple ⅓ of the face width. In order to do that, I used

outlinerather thanborderasborderactually takes space in the element whereoutlinedoesn't.outline: 1px solid var(--flat-cube-outer, black); }These are simply the

topandleftpositions of the individual faces. We don't really need to go line-by-line here..U-face { top: 0; left: var(--flat-cube-face-width); } .L-face { top: var(--flat-cube-face-width); left: 0; } .F-face { top: var(--flat-cube-face-width); left: var(--flat-cube-face-width); } .R-face { top: var(--flat-cube-face-width); left: calc(2 * var(--flat-cube-face-width)); } .B-face { top: var(--flat-cube-face-width); left: calc(3 * var(--flat-cube-face-width)); } .D-face { top: calc(2 * var(--flat-cube-face-width)); left: var(--flat-cube-face-width); }Using the child selector to access the pieces inside the face.

.face > div {As I mentioned above, we want to calculate the width of the pieces simply as ⅓ of a face so we use

outline. The alternative would be to calculate the pieces as (⅓ of (the face width minus 2 * the internal border width)). That sounds mistake-prone.width: calc(var(--flat-cube-face-width)/3); height: calc(var(--flat-cube-face-width)/3); outline: 1px solid var(--flat-cube-inner, black); }Again, this is just colours. We don't need to go line-by-line. The only thing to note is that each piece has a fallback colour specified in case the containing application doesn't pass one in.

.U-piece { background-color: var(--flat-cube-up, #ebed2b); } .L-piece { background-color: var(--flat-cube-left, #ff6b16); } .F-piece { background-color: var(--flat-cube-front, #6cfe3b); } .R-piece { background-color: var(--flat-cube-right, #ec1d35); } .B-piece { background-color: var(--flat-cube-back, #4db4d7); } .D-piece { background-color: var(--flat-cube-down, #fffbf8); }And finally, we close off our template literal and end the

stylemethod.`; }Template

Now we build up the actual DOM of the element. We've done a lot of styling so far but, technically, we've nothing to apply the styles to. For that, we're going to build up the structure of faces and pieces then attach the styles.

structure() {At this point, we have a couple of choices. We can either build this structure once and update it or build it fresh every time we need to make a change. The latter is easier to write but the former has better performance. So let's do that.

This is the

createTemplatemethod we called in theconstructor. It is called only once for each instance of the component so we don't need to go through the whole building process every time.createTemplate() {First, create a new

template. Templates are designed for exactly this case – building a structure once and reuse it several times.const template = document.createElement('template');Then we attach the styles we defined earlier:

template.innerHTML = `<style>${this.style()}</style>`;And, finally, actually create the first element that actually appears in the component. This is the div that contains everything. We also add the

.flat-cubeclass to it.const cubeElement = document.createElement('div'); cubeElement.classList.add('flat-cube');The

this.facesarray we defined in the constructor comes back here. We loop over each face we require and create the DOM for it.this.faces.forEach((face, i) => {A

divto contain the face with the shared.faceclass for the size and the specific class for the position and colour –.U-face,.B-face, etc.const faceElement = document.createElement('div'); faceElement.classList.add('face'); faceElement.classList.add(`${face}-face`);Now we create the individual pieces. If we wanted to make this component customisable so that it could represent cubes with a different number of pieces(2x2, 4x4, 17x17, etc.), we'd use a variable here instead of

9.for (let j=0; j < 9; j++) {Now we call out to the preparePiece method (see below) without an element to make sure the piece has the right class assigned to it before we append the piece to the face.

faceElement.appendChild(this.preparePiece(i, j)); }By the time we get here, we have a

facediv with 9piecedivs appended. Now we can add that to thecube.cubeElement.appendChild(faceElement); });Do that for each face and we have a div containing a completed

flat-cubewhich we can append to the template.template.content.appendChild(cubeElement);And return the template to the

constructor.return template; }Updating

Now we have the structure in a template, we can grab a copy of it any time we need to update the cube.

updateTemplate() {Passing

truetocloneNodemeans we get a deep clone (containing all the nested faces and pieces) rather than a shallow clone (just the top-level element).const update = this.template.content.cloneNode(true);We loop over each

faceand then each piece (div) in eachfaceto update it.update.querySelectorAll('.face').forEach((face, i) => { face.querySelectorAll('div').forEach((piece, j) => {We're using the same method here as we did to create the individual pieces (code reuse is A Good Thing) but this time we're passing in the piece we already have rather than asking the method to create a new one.

this.preparePiece(i, j, piece);The

updatevariable now contains an updated DOM representing the currentfaceletstring.}); }); return update; }This method takes the

i(index of the face) andj(index of the piece) we need to figure out which colour this piece needs to be. It also takes an optional argument ofpiece. If we don't provide that – the way we do in the initialcreateTemplatecall – piece will be a newly created div. If we do provide that argument, we'll update whatever is passed in instead.preparePiece(i, j, piece = document.createElement('div')) {We have to map the facelet string – default: "UUUUUUUUU...etc" – into the two-dimensional structure of faces and pieces. Okay, technically, it's a one-dimensional mapping of a two-dimensional mapping of a three-dimensional structure. But... let's just not.

const start = (i * 9) + j; const end = (i * 9) + j + 1;This means "If we don't have a facelet string, just colour the piece according to what face it is in, otherwise, colour it according to the i,j position in the facelet string".

piece.className = !this.facelet ? `${this.faces[i]}-piece` : `${this.facelet.slice(start, end)}-piece`;Once we've updated the piece, return it so it can be included in the content.

return piece; }We call this method in the constructor and every time we want to update.

render() {Empty out the element content

this._shadowRoot.innerHTML = '';And replace it immediately with the content we generate with the update method from above.

this._shadowRoot.appendChild(this.updateTemplate()); }This is how we register the component to listen for changes. This getter returns an array listing the attributes we want to listen for.

static get observedAttributes() {There's only one attribute we care about listening to. Any change to the

faceletattribute will cause us to re-render.return ['facelet']; }The other part of registering for changes to the attributes. This is the handler that is invoked with the

nameof the changed attribute (useful when you're listening to a lot of attributes), theoldValue(before the change) and thenewValue(after the change).attributeChangedCallback(name, oldValue, newValue) {We only care about the

newValuebecause we've only registered a single listener and we don't need the oldValue.this.facelet = newValue;Then we trigger a new render to update the state of the element.

this.render(); }And we close out our

FlatCubeclass.}Listening for events

The final part of the puzzle is to register our new element with the browser so that it knows what to do when it sees our element. We do this by passing our new element to the

CustomElementRegistry.I like to check if my element has already been registered. Without this, including the script twice by accident will trigger an error that the user of the component isn't necessarily going to recognise.

if (!window.customElements.get('flat-cube')) {To register, you pass the tag name you want to use and the element class.

NOTE: tag names for custom components must contain a hyphen.

customElements.define('flat-cube', FlatCube); }

And that's it. Every line looked at and, hopefully, explained.

Let me know what you would have done differently or if there are any (ideally small) projects you'd like me to look at line-by-line.

-

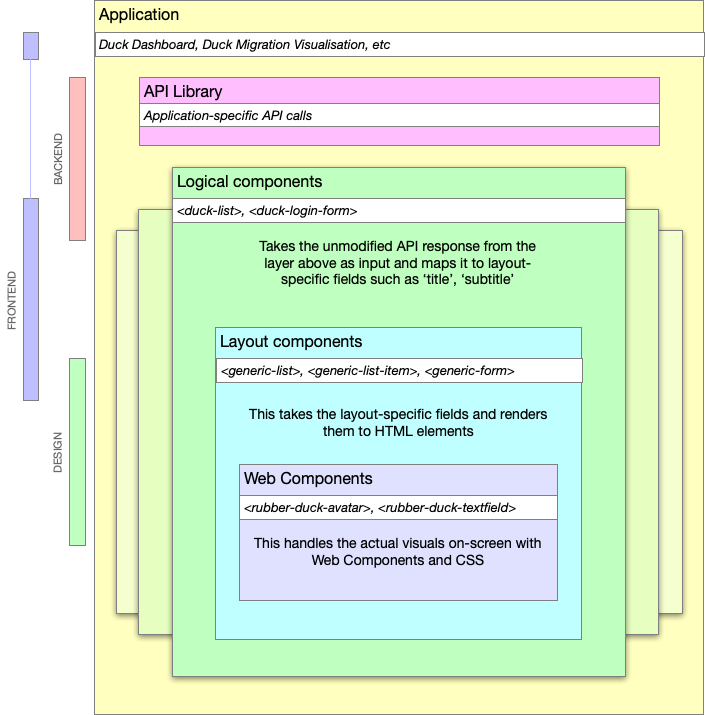

Application Layers

On recent web app projects in HERE Tracking, I've been using a layered component structure that fits particularly well with frontends that access and interact with JSON APIs.

The primary reason for structuring our apps this way is that it gives us a lot of freedom in our workflow and still fits well within the larger HERE structure with horizontal design teams that align across the multiple products. This works as a way to enable parallel contributions from everybody across the engineering teams.

The layers are:

- Application

- Library (JS library to access the API)

- Logical (maps business objects to layout concepts)

- Layout (renders layout components)

- Components (low level elements and design)

And, generally, these layers are within the areas of expertise of the Backend, Frontend and Design specialists.

It shouldn't be necessary to say this but just to make sure I'm not misunderstood: it's important to note that none of these roles is limited to the scope below, this is just a general 'areas of expertise' guide. See my previous post about shared responsibilities.

- Backend teams create the API and implement the JS library. If possible, also implement the basic logical component which performs whatever business logic is required.

- Frontend teams build the application out of components, further maintain the logical components and the mapping between logical and layout components

- Design teams implement the core web components and company-wide design system of UX, UI, mental models, etc. This layer can also be based upon an open-source design system such as Carbon or Material.

Of course, the backend team can modify the web components if they have the inclination just as the design team couuld make improvements to the database if they are able to improve the product.

Example

NOTE: The example below is mostly Vue-like but this layered approach doesn't rely on any framework, language or coding style. It's a way to split and share responsibilities.

Rubber Duck Inc. make GPS-enabled rubber ducks. They have a dashboard where customers can see the location of their ducks. The dashboard includes an overview list of ducks.

Backend

The Backend team extend the Duck definition (stored in their duck-ument database) to include a new 'icon' field then update the

GET /ducksendpoint that allows you to receive a list of all the ducks you own.Sample response:

{ "data": [{ "id": 123, "name": "Hugh", "colour": "hotpink, "icon": "star", }, [{ "id": 321, "name": "Anthony", "colour": "yellow", "icon": "dot", }], "count": 2 }They check to see if the JS library needs updating (if they are using automated code generation, this might already be done). It doesn't, it already returns the full

dataarray of the response:fetch(`${api}/ducks`) .then(response => response.json) .then(json => json.data)The data is rendered in the web app using a logical web component

<duck-list :ducks="ducks"/>The engineer digs one step deeper (into the 'logical' or 'application components' library) and sees that the

duck-listcomponent wraps thegeneric-listcomponent but with a few modifications to the data structure.<template> <generic-list :items="items"/> </template> <script> : props: { ducks: Array, }, data() { return { items: this.ducks.map(duck => ({ title: duck.name, subtitle: `This duck is ${duck.colour}`, })) }; }, : </script>And then modifies it to also pass the icon into the

generic-listso that each item looks like:{ title: duck.name, subtitle: `This duck is ${duck.colour}`, icon: duck.icon }Frontend

In a parallel task, the frontend specialist can be improving the

generic-listcomponent. This component doesn't do much except create a set ofgeneric-list-itemelements.<template> <ul> <generic-list-item for="item in items" :item="item"> </ul> </template>Each

generic-list-itemis built from basic web components from the company's DuckDesign language:<template> <li> <rubber-duck-title>{{title}}</rubber-duck-title> <rubber-duck-subtitle>{{subtitle}}</rubber-duck-subtitle> </li> </template>Frontend can then improve this to take advantage of the new data structure. Handily, there's a

rubber-duck-avatarcomponent. That should work here:<template> <li> <rubber-duck-avatar if="icon">{{icon}}</rubber-duck-icon> <rubber-duck-title>{{title}}</rubber-duck-title> <rubber-duck-subtitle>{{subtitle}}</rubber-duck-subtitle> </li> </template>Design

So close, except the alignment's not quite right... Frontend has a chat with design and they decide that, while this could be solved in the

generic-list-itemcomponent (or even in theduck-listor the application layer), having an icon next to a title is a more generic requirement so it should be solved in the lowest design component layer:rubber-duck-avatar + rubber-duck-title { margin-left: 0; }Design tweaks the alignment of the

rubber-duck-avatarcomponent and deploys it company-wide to all product teams. Every team benefits from the shared library, the DuckDashboard team gets to show off their new duck icons, everybody helped complete the product story and nobody got hurt.

Conclusion

Admittedly, this does lead to having multiple individual repositories for a single application

- dashboard-app

- duck-api.js

- dashboard-components

- layout-components

- duck-design-web-components

But it does give each team the flexibility to contribute beyond their core area and not be blocked by other teams.

Let me know what you think or how you'd improve it. Do you already use an approach like this?

-

Colouring a Rubik's Cube with CSS variables

I was playing around with the flick keyboard from the last post and decided that I could do with a way to draw the cube. There are plenty of existing cube render tools out there (https://codepen.io/Omelyan/pen/BKmedK, http://joews.github.io/rubik-js/, https://cubing.net/api/visualcube/) but I felt like making my own because I needed something to do with my hands while watching the second season of Dead To Me.

What came out was a self-contained web component using CSS variables, fallback styles and calculations to produce a nicely customisable element:

Default cube

<flat-cube facelet="UUUUUUUUURRRRRRRRRFFFFFFFFFDDDDDDDDDLLLLLLLLLBBBBBBBBB" />Scrambled with "M' U F R E R E2 M' U' M' F2"

<flat-cube facelet="BDDFBFUURDRBUUBLDULLFULRDUFLBUDFRDDFRLBRDFLLFRFULRBRBB" />Same again but with different colours:

:root { --flat-cube-up: blanchedalmond; --flat-cube-left: orangered; --flat-cube-front: lawngreen; --flat-cube-right: rebeccapurple; --flat-cube-back: dodgerblue; --flat-cube-down: darkslategrey; --flat-cube-inner: white; --flat-cube-outer: white; } }

The configuration of the pieces is defined by a "facelet" string. This is a way of representing a configuration of a 3x3 twisty puzzle by enumerating the faces like this:

+------------+ | U1 U2 U3 | | | | U4 U5 U6 | | | | U7 U8 U9 | +------------+------------+------------+------------+ | L1 L2 L3 | F1 F2 F3 | R1 R2 R3 | B1 B2 B3 | | | | | | | L4 L5 L6 | F4 F5 F6 | R4 R5 R6 | B4 B5 B6 | | | | | | | L7 L8 L9 | F7 F8 F9 | R7 R8 R9 | B7 B8 B9 | +------------+------------+------------+------------+ | D1 D2 D3 | | | | D4 D5 D6 | | | | D7 D8 D9 | +------------+For example, a solved cube is represented by:

UUUUUUUUURRRRRRRRRFFFFFFFFFDDDDDDDDDLLLLLLLLLBBBBBBBBBWhile the scrambled version shown above is:

BDDFBFUURDRBUUBLDULLFULRDUFLBUDFRDDFRLBRDFLLFRFULRBRBBI chose this representation purely because I've seen it used in other cube modelling projects.

In my demo page, I include the https://github.com/ldez/cubejs library and use that to translate move strings into facelet strings. It would be possible to include this directly in the web component and would improve the usability at the cost of a bit of extra complexity inside the component. That would allow using the component like this:

<flat-cube moves="M' U F R E R E2 M' U' M' F2" />Which does look nicer.

Style

Throughout the component, I have tried to use CSS variables and the calc function as much as possible to allow the component to be restyled and scaled as needed while offering sensible fallbacks.

For example, the styles to define a face include a calculated size with a fallback:

:host { --flat-cube-face-width: var(--flat-cube-face, 100px); } .face { height: var(--flat-cube-face-width); width: var(--flat-cube-face-width); outline: 1px solid var(--flat-cube-outer, black); }While the faces each have a CSS variable to allow styling them along with a fallback:

.U-piece { background-color: var(--flat-cube-up, #ebed2b); }In action

-

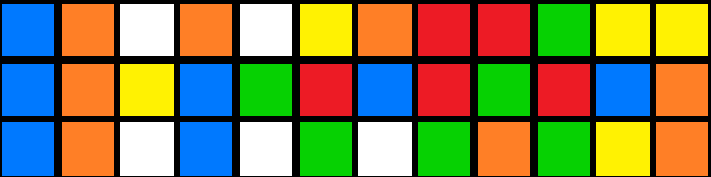

Rubik's Keyboard

For anybody who has a bit of a technical, problem-solving mind (I'm going to guess that's literally anybody reading this), there's a high likelihood that you have not only played with or owned a Rubik's cube but also attempted to solve one using a step-by-step guide.

Notation

Most guides are written using 'Singmaster Notation' where F denotes a clockwise rotation of the side facing the solver, U' is an anticlockwise rotation of the uppermost layer, and so on.

This notation is used to describe not only solving steps but also scrambles when applied to an already solved cube. The following scramble, for example:

L R' D2 U' B D2 F2 D F' L2 F2 R' D' L R2 D U2 L F' L' B2 D U R2 F' D' L' R D2 UProduces this:

Kana Flick

In seemingly unrelated news, the standard way to type Japanese characters on a smartphone is using a Kana Flick Keyboard.

This style of keyboard groups characters together so that you essentially touch the key to select the consonant, move in one of four directions (or stay still) to select the vowel and let go to confirm. Put that way, describing it takes a lot longer than actually doing it.

Rubik's flickboard

This is a long preamble to say that I was thinking through a new game design the other day when I had an idea about a variation on the kana flick keyboard that could be used to enter Rubik's cube move notation:

Note: It doesn't yet include 'fat' moves (lowercase variations which denote moving two slices of the cube rather than just one),

E,Sor rotations (x,y,z).It only works on iOS for now because it was a Sunday-evening sofa hack while rewatching Voyager on Netflix.

-

Toast Guide

-

One product, many owners

or: "What you are is not what you do"

Imagine you're on a bank heist. You're literally right in the middle of the job. Your expert team consists of some very talented criminals. There's The Hacker, The Driver, The Money Man, The Explosives Expert, You.

While The Hacker is rewriting the safe door control circuit's firmware to speed up the clock and release the time-lock, you find the computer controlling the security cameras. Do you make a note on a ToDo list?

- Remind the Hacker to delete the security footage [ ]

Or do you grab the mouse and select

File > Delete Security Footage?(Yes, it is that easy, you specifically chose this bank to hit because it has terrible security)

Once you leave the building, there's a bit of confusion and The Explosives Expert ends up in the driving seat. The Driver is in the back! The police are one block away. Do you all get out, rearrange, let the driver get in the front? Or do you just start driving? The Explosives Expert may not know the exact route but, remember, The Driver is right there, able to give directions.

And that is literally exactly what developing a software product is like.

Product

The role of the Product Owner is fairly well known, well defined and ubiquitous across agile teams. But we know that everybody in the team is responsible for the product. The PO might be an expert in certain areas of understanding the customers' mindset or prioritisation but everyone is responsible for the product. Everyone owns the product but, in this instance, there is one person with the title Product Owner.

Quality

In the same way, everyone working on a product is responsible for the quality. If you're working on the database and you find that the drop-down menu occasionally disappears, there are multiple ways you could deal with it.

- You could just ignore it. It's not the databases's fault, after all.

- You could file a ticket in the system for someone else to pick up.

- You could figure out a reproducible test-case.

- You could pop open the web inspector and check the console, poke around a bit.

- You could check out the code and fix it.

There are a lot of ways you could do this, depending on your abilities and your available time but whatever you do – as long as it's not just ignoring the problem – you are taking a step to improve the quality of the product.

The QA might be an expert in writing test cases or have the kind of brain that would think of using a base64-encoded png as a username but that doesn't mean quality begins and ends with them. They are the Quality Owner. Everyone is responsible for quality, but it's the Quality Owner's priority.

Architecture

And so we come to the Architecture Owner. Even now, a couple of decades after the Agile Manifesto with repeated swings for and against it, Agile and Architecture have an uneasy relationship or, at least, a poorly-defined one.

The image of The Architect as the keeper of the book, the writer of the specification still triggers rebellion in the minds of those who zealously misunderstand agile practices. Of course, there can't be a predestined development plan when the destination is unknown. But that doesn't mean you blindly run headlong into every problem. You look around, you keep aware of your surroundings, of other projects. If nothing else, you stay aware of your situation to know if you're doing something you've done before. If you are, you can look at how you solved this in the past and do the same or better this time round. This is everyone's responsibility but it's also Architecture.

"The last time I did a job in this town, the bank had a silent alarm system. You might want to cut the wires on this one before it's triggered this time."

Agile architecture is about looking a little bit forward and a little bit back. How will this work next year? How did we solve it last year? Is another team working on the same thing? Did they? Will they? Any prototyping done to figure out the next step of the journey is architecture.

Just as development, quality, product, design, business are all essential parts of any project, so is architecture. The Architecture Owner doesn't only do architecture and isn't the only person to do architecture tasks. It is merely their priority. They may have additional expertise or experience, they may be faster at building prototypes or drawing boxes-and-arrows but they are as integral a part of the product development team as anyone else.

To be effectively agile, everyone should care ultimately about creating the product.

Practicality

This is all good in theory. What does this actually mean? Is 'Architecture Owner' a full-time job? Does an architect architect all day long?

Well, is Product Owner a full-time job? QA? Explosives Expert? For a lot of teams, it is. But not all. A particularly small team might have an Engineer who acts as part-time PO. A larger team will have a dedicated person for each role. What you are is not what you do. The whole point of this is that being the owner of a domain doesn't make you solely and exclusively responsible.

In many cases, the person who assumes the AO role will be a strong engineer who can jump on any part of the codebase and be effective quickly (because in addition to being experienced engineers, they understand the architecture and the motivations behind the architecture) and who coaches others to think more architecturally and be effective quickly.

Notes

In the decade or so since I first wrote this, the role of architecture in agile has come and gone and come back a couple of times. I find myself directing people to the same articles repeatedly so I figured it was time to update and publish this.

There are many other articles on agile architecture, most of which are written much more seriously than this.

-

Microbots

Another April, another album.

-

Art Auction

I rarely sell my original paintings, preferring instead to make prints. This does mean I have shelves full of artwork I'll have to do something with one day.

I did, however, donate the original of this painting here to a fundraising auction.

If you'd like to find out more about the auction (and possibly bid), check out:

-

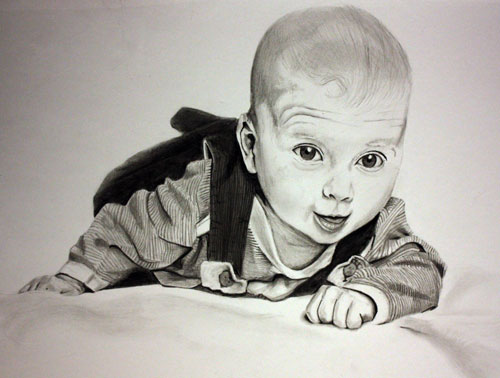

Painting a children's book

Here's a quick time-lapse of me painting a page from a children's book.

-

Probably Not A Robot

I made another album…

This is for all those people who have wondered at some point whether they are actually robots. If you were, would you know? What if your programmer wrote your software so that you wouldn't figure it out?

As with all my albums, it's free to download so you have no reason not to.

-

The Great British Web App

I think I've figured out the next break-out TV competition show: The Great British Web App

Round 1: The Microsite

Each team is presented with a PSD and a Word doc. You've got 1 hour to slice up the images, mark up the content and deploy a microsite advertising an event. The judges are harsh on anyone demoing on localhost.

Round 2: The Magazine

Using the tools, frameworks and languages of your choice, design, develop and deploy a magazine-style web site with full CMS and social media share buttons.

Teams are judged on semantic markup, SEO friendliness and accessibility audit score.

Round 3: The Masterpiece

Your chance to show off. You have 4 hours to build the shiniest, scrollbar-misusing, WebGL-heavy, experimental web masterpiece possible to match the client brief "Anything, as long as it pops!".

You only get points if it's mobile-friendly and works on IE 6.

Prize

The Winners of the Grand Finale get a copy of Dreamweaver 4 and enrolled in a Coursera course on how to retrain as a baker...

Bonus Technical challenge for the celebrity edition

Rebuild a classic HTML element using the latest web technologies - implement

<marquee>using Web Components or<blink>using Web Sockets. -

Web Components vs Vue Components

I've been doing a lot of work in Vue recently so when I was asked to evaluate using Web Components on an upcoming project, I approached it with a Vue-ish mindset.

I've not really kept my eye on Web Components for the last couple of years beyond seeing original proposals being superceded and import specs being replaced. Just seeing those things on the periphery were enough to make me think "Meh... I'll have a look later when it's all died down".

Now, I know that Web Components !== Vue. But, I was interested in what knowledge could be migrated from one technology to the other. If I were building an actual web app, I'd definitely use Vue. Building a boxful of reusable, shareable UI elements, though... let's find out.

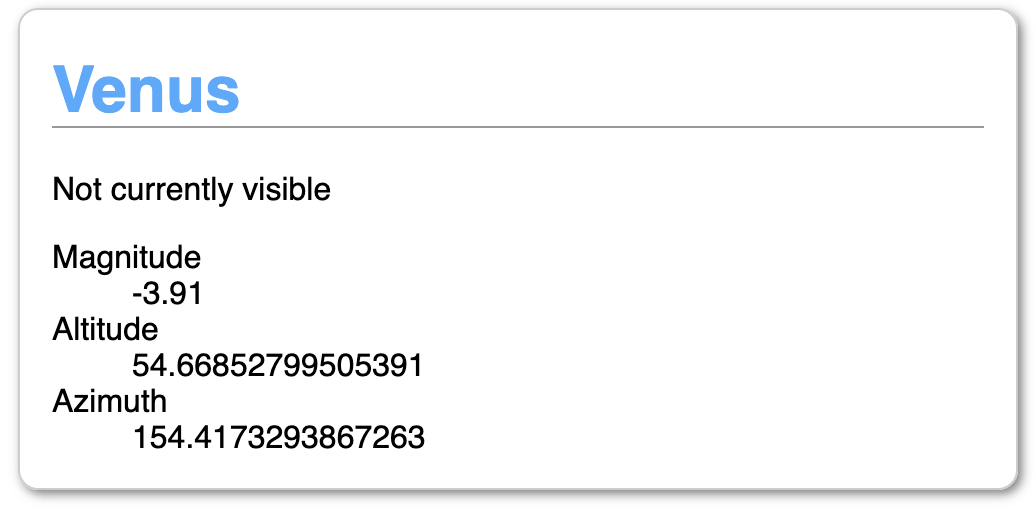

I'm not going to build anything too complex to start with. How about an "Planet Summary" panel? A simple panel that renders summary information about a planet given a JSON object.

I have an API that returns JSON information about where in the sky to find planets when given your latitude and longitude. For example, if you're standing slightly south of the centre of Berlin and want to know where Venus is, you'd make this request:

https://planets-api.awsm.st/venus/52.5/13.4

And the response would be:

{ "name": "Venus", "number": 1, "colour": 1, "colleft": 24, "colright": 25, "alt": 13.043427032890424, "az": 290.3495756869397, "dec": 22.661411404345362, "ra": 110.21545618074397, "H": 98.18491228623316, "eclon": 108.59563862950628, "eclat": 0.5200939814134588, "illum": 0.9918628383385676, "r": 0.7192422869900328, "dist": 1.7155717469739922, "mag": -3.909377586961354, "elong": 0, "pa": 0, "p": 1, "description": { "altitude": "Barely above the horizon", "azimuth": "West" }, "visible": false }In this case, it determines Venus isn't visible because, even though it's above the horizon, it's not bright enough given the time of day (about 6pm).

We want to make a little UI card that displays this information.

Mapping Vue features to Web Components

Vue Web Component Notes name class name data instance properties props attributes these are not reactive by default. Attributes have to be specifically observed (see watch).watch attributeChangedCallback first, register your watched attributes with `observedAttributes` then process them in attributeChangedCallback computed getters methods class methods mounted connectedCallback called async so the component may not be fully ready or may have been detached. Use Node.isConnected to protect against calling a dead node componentWillUnmount disconnectedCallback style block style block inside template styles are scoped by default template block literal template JS literal templates (backtick strings) are nowhere near as powerful for templating as an actual template library. Vue template features such as `v-for` can be replicated with vanilla JS but a single-purpose template library (such as `lit-html`) is a good idea. NOTE: I am deliberately not using Webpack. I realise that actual applications would be using additional tooling but I want to see what we can do without it.

The first thing that clicked with me was when I realised that computed properties and getters are identical. Nice.

Here's Vue code to return the planet name or a default string:

computed: { name() { return this.planet.name || ''; }, }And Web Component:

get name() { return this.planet.name || ''; }Well, that was easy (and trivial).

The same goes for defining the custom element for use in the DOM

Vue:

components: { "planet-summary": PlanetSummary }Web Components:

customElements.define("planet-summary", PlanetSummary);The only real difference at this level is the data binding. In Vue, props passed from a parent element to a child are automatically updated. If you change the data passed in, the child updates by default. With Web Components, you need to explicitly say you want to be notified of changes.

This is basically the same as setting a

watchin Vue. Data that changes in a slightly less tightly-bound fashion can be watched and the changes trigger updates further down.Watches

Watches in Vue:

watch: { altitude(newValue, oldValue) { ... } }With Web Components, registering a watch and reacting to changes are separate:

static get observedAttributes() { return ['altitude']; } attributeChangedCallback(name, oldValue, newValue) { if(name === 'altitude') { ... } }Templating

Vue contains full templating support – for loops, conditional rendering, seamless passing around of data. Natively, you have literal templates and that's about it.

To create a list of planets, you'd use the

v-fordirective and loop over your planets array.Vue:

<ul> <li v-for="planet in planets"> <planet-summary :planet="planet"></planet-summary> </li> </ul>Web Component

<ul> ${this.planets.map(planet => ` <li> <planet-summary planet='${JSON.stringify(planet)}'></planet-summary> </li> `).join('')} </ul>The

joinis there because we're creating an HTML string out of an array of list items. You could also accomplish this with a reduce.Boilerplate

With Web Components, your component lives in the Shadow DOM so you are responsible for updating it yourself. Vue handles DOM updates for you.

Here is a basic render setup:

constructor() { super(); this._shadowRoot = this.attachShadow({ mode: "open" }); this.render(); } render() { this._shadowRoot.innerHTML = ''; this._shadowRoot.appendChild(this.template().content.cloneNode(true)); }This needs to be explicitly included in every component as they are standalone whereas Vue automatically handles DOM updates.

CSS

Due to the fact that Web Components live in a separate document fragment, There are complications around sharing styles between the host page and the component which are nicely explained on CSS Tricks. The biggest benefit, on the other hand, is that all styles are scoped by default.

Vue without Webpack (or other tooling) also has its own complications around styles (specifically scoping styles) but if you're building a Vue application, it is much more straightforward to specify which styles are global and which are scoped.

Summary

Here is the Vue Planet Summary and the source of planet-summary-vue.js.

Here is the Web Component Planet Summary and the source of planet-summary.js.

Bonus: here's a Planet List Web Component which includes the Planet Summary component. And the source of planet-list.js

All in all, pretty much everything between basic Vue Components and Web Components can be mapped one-to-one. The differences are all the stuff around the basic construction of the components.

I'd say that if you're looking to build completely standalone, framework-free reusable components, you'll be able to accomplish it with the Web Components standard. You just might have a bit of extra lifting and boilerplate to deal with.

On the other hand, if you're already planning on building a full web application with data management and reactive components, use the tools available to you.

-

Sponsored Events on the Blockchain

Sponsored Event

In my day job, I’m responsible (among other things) for our efforts to integrate block chain and supply chain. Most of this relies on Smart Contracts. In order to learn more about them, I did a project last year that let me get in-depth.

The idea came about while my wonderful other half was organising a sponsored walk – Museum Marathon Edinburgh. It should be possible to create a sponsored event then manage funds and pledges through a smart contract. Unfortunately, I didn't get the project completed in time but I did manage to build Sponsored Event (possibly my most uninspired project name ever).

This can be used to manage, collect and distribute donations for any kind of charity event – sponsored walk, climb, run, pogo-stick marathon, etc.

No more chasing people for money after the event. No need to worry about how or whether the money makes it to the charity.

This contract also allows cancellation and withdrawal from the event. In that case, the participant's initial sign-up fee is transferred to the receiving charity but any pledges are returned to the sponsor.

Additional information about the event (description, title, images) should be stored off-chain in another database.

I built it almost 12 months ago but just updated it to use the latest version of Solidity. Due to the fast-paced nature of these things, it may or may not still work. Who knows?

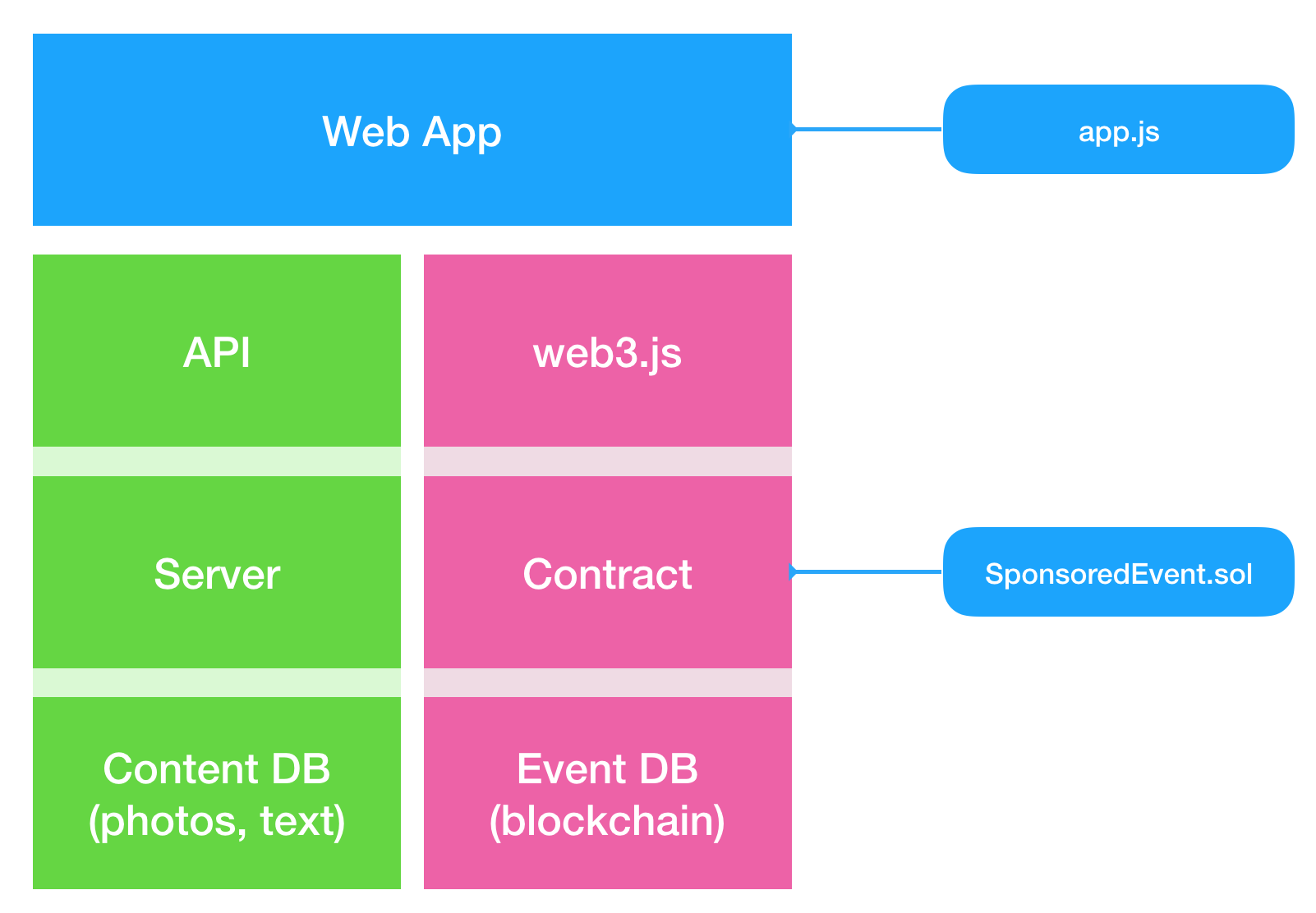

Web App Structure

The web app uses

web3.jsto interact with the smart contractSponsoredEvent.sol. It can also interact with a separate content database to keep as much non-critical data off the blockchain as possible.

Key Concepts

The Event

An event for charity where someone must complete all or part of something. In exchange someone else pledges to give money to a recipient.

The Recipient

The charity or body receiving the funds at the end. They don't need to do anything except have an account capable of receiving the funds.

The Organiser

The person creating the event specifying the name, date, description and designating the account of The Recipient. This account is the owner of The Event.

The Participant

The person actually taking part in The Event. This person signs up for the event and commits to taking part. They are given a unique URL which The Sponsor can use to pledge money. Participants are charged a sign-up fee.

The Sponsor

The source of the funds. This party has promised to donate money to The Recipient if The Participant takes part in The Event. They can include a message along with their pledge.

Cancellation

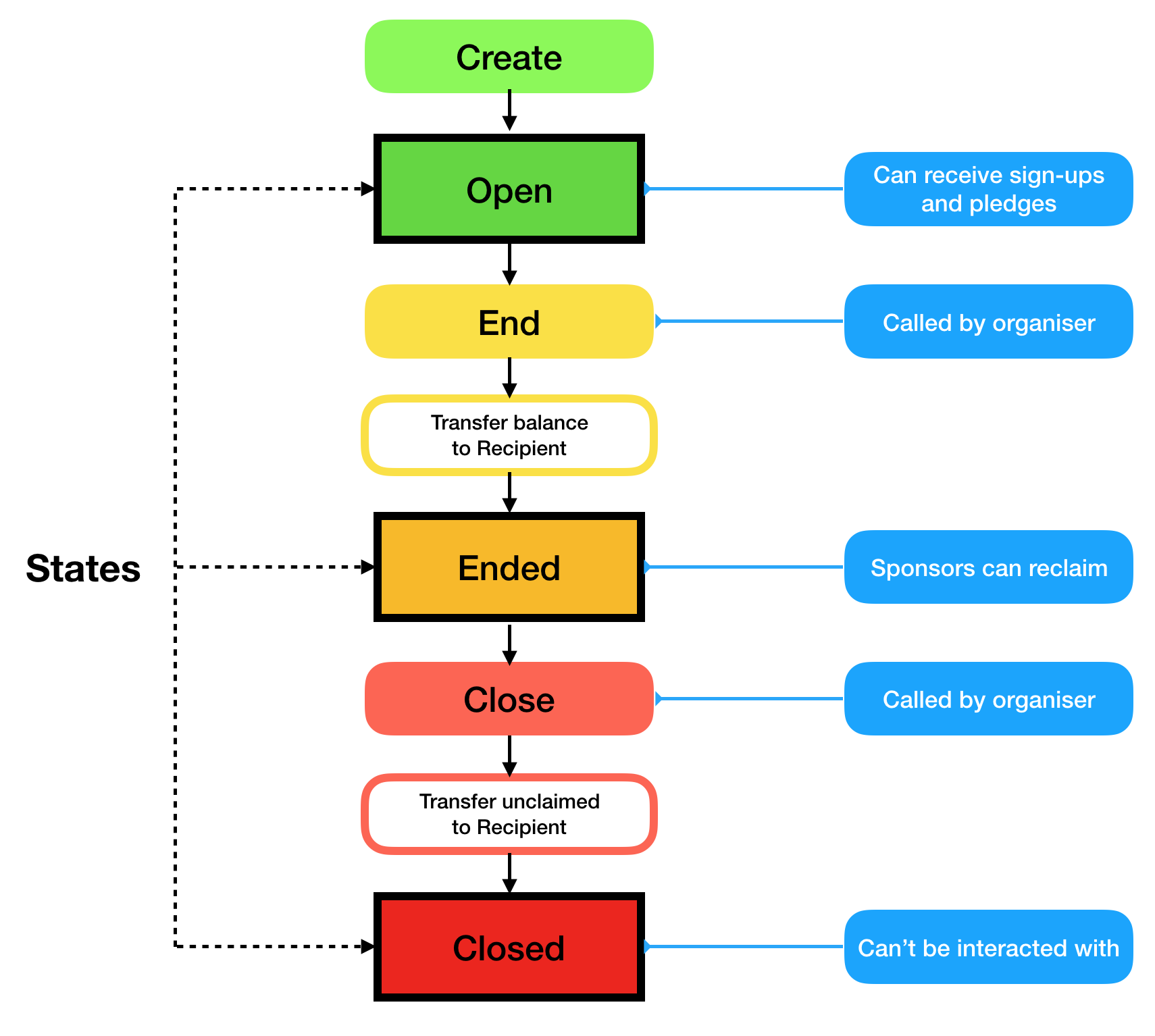

If the event is cancelled, all pledged money is automatically returned to The Sponsor. Sign-up fees are returned to The Participant.

Withdrawal from the event

If The Participant withdraws, all money pledged to them is automatically available for The Sponsor to reclaim. The participant's sign-up fee is not returned.

Ending the event

The Organiser can mark an event as Ended. This will transfer completed pledges and sign-up fees to The Recipient.

Retrieval of funds

Once the event has ended, The Sponsor is able to reclaim any funds donated to Participants who did not complete the event. The funds are not automatically returned as The Event may not have enough to cover the transaction fees.

Closing the contract

After a period of time following the end of an event, The Organiser will close the event. This will transfer any remaining balance to The Recipient.

Contract Lifecycle

Each Sponsored Event contract starts when The Organiser creates it and deploys it on the blockchain. From that point, The Participants can sign up and The Sponsors can make pledges.

Source

You can get the source on github and deploy it against a test network or run Ganache locally. Whatever you do, don't throw actual money against it. That would be a terrible idea.

-

It's Art, I tell you!

After many years of meaning to do it and not actually doing it, I decided to open an Etsy store. It's not my first online print store. In fact, one of the key reasons I ever learned HTML, CSS and PHP was to build an online store for my artwork back in... 2002? Seriously? Seventeen years ago...?

Anyways... I've put in a few prints so far, mostly from the Octoplural series of paintings although my current favourite is the My Day Flowchart.

I really like how I could tie Etsy together with Printful so the prints are generated on demand rather than me holding a lot of stock which is how we did it back in the olden days...

-

I made a thing. Now what?

Anyone who knows me knows I'm all about solving problems. That's my thing. There's a problem, here are the facts, here's the solution. It's almost always a technical solution.

So when I was presented with the problem of making it easier to make background music for YouTube videos, I built Harmonious Studio.

Technically, its a good solution – it lets you mix individual loopable instruments into a single track. Behind the scenes, it uses a the Web Audio API to manage the individual tracks in the browser and ffmpeg to prepare the final high-quality download.

The question is: what now?

The original plan was to allow others to upload their tracks and create a marketplace for music – basically positioning Harmonious Studio as "Shutterstock for Music". There are several options for this – monthly subscription for unlimited downloads, fee per premium track, fee per download.

There are a few problems with this, however.

1. Free is better

There is a huge amount of free music available online. Every week there's a post on /r/gamedev where a composer gives away thousands of tracks for free. The majority of responses I got from the feedback form I asked for on Harmonious fell into the segment "Yes, I use background music. No, I'd never pay for it".

2. Good enough is good enough

The idea was that content creators would be able to make music to fit their content exactly. However, getting something instantly for free that almost fits is preferable to making something custom that costs time and money. Kind of obvious when you think about it.

3. If it works, keep it

The other piece of feedback I got from YouTubers was that, once they've found a piece of music that works, they're more likely to copy-paste it into the next video than get a new one. Once they've got 3 or 4 'go-to' tracks, they've got everything they need for most kinds of videos.

So... what now?

It's a good technical solution to a problem without a good market. This is usually the point where the insightful entrepreneur pivots and relaunches using the tech in a completely new way. Anyone have any suggestions about how to do that?

-

Harmonious Studio

Some of you might know that my wonderful other half reviews a lot of books on Youtube and that I occasionally make music. So I, naturally, help her on the videos by providing background music for her videos.

After a couple of months of re-recording or remixing existing tracks, I realised the key was that – as a content creator – she wanted to have more control over the music in her videos than just picking one of my existing instrumentals. It occurred to me that not everyone that makes videos, games or podcasts has access to a musician with recording equipment but might want the same kind of control over their music.

There are plenty of royalty-free music sites around but a lot of them suffer from the problem that there are 20,000+ tracks to choose from and you might need to go through a few thousand of them to find the right track.

To solve this, I built my latest project: Harmonious Studio.

A 'set' is a collection of individual tracks built around a single basic loop. To create your individual mix, you pick a set, switch tracks on or off and download a loopable piece of music that can be dragged into whatever you use to create your videos or podcasts. All the tracks in a set work together but different combinations can change a piece from light and happy to dramatic and angry to wistful and relaxed.

Here are a few examples of mixes built from the same set:

For Game Makers

I'm also hoping this is useful for game developers. Fundamentally, it's a way to create lots of variations of music that go well together – some dramatic, some calm, some exciting – these variations can be tied to metrics and areas in a game and smoothly and transparently change with the game's mood and atmosphere.

For musicians

The next phase is to open up the platform to other musicians to enable them to add tracks to an existing set or create an entirely new set.

For now, all tracks are licensed for free under CC-by 4.0 but, if it turns out to be useful, musicians will be able to offer their tracks for sale. Those buying the track will pay a single fee for the mix and the fee will be split between the artists who created the tracks in the mix.

If you're a content creator, know a content creator, have ever thought about being a content creator or even have simply read this far in this post, please check it out and give me some feedback.

-

HERE Tracking

You'll have noticed I haven't updated much recently. Even when I did, if was with distinctly non-tech stuff. The reason being I've been busy. Not "I've got a big to-do list" busy or "I've had a couple of browser tabs open for a few weeks that I'll get round to eventually" busy, either. I've got a text-file to-do list that's been open, unsaved in the background since January 2017 and there are a couple of background tabs I've been meaning to get round to reading since late 2014. Really.

What's been keeping me busy?

Easy answer: HERE Tracking.

A couple of years back, a few of us got interested in how IoT devices could work with location. What's the smallest, simplest device we can connect to the cloud and pin point on a map? Within a few weeks, we had a basic cloud and at CES in January this year, we launched a fully-fledged product. In that time, I've moved from 'prototyper who built version 0.1 on his laptop during the Christmas holidays' to something roughly equivalent to CTO of a medium-sized tech company. Not bad.

What's it do?

In essence, a small IoT device with some combination of GSM, WiFi and Bluetooth does a scan to find out what wireless networks, Bluetooth beacons and cell towers are visible and how strong they appear. They send their scan to HERE Tracking where it gets resolved into a latitude/longitude and then saved. The best bit is that it works indoors and outdoors.

Look, we've even got our own shiny video with cheesy voiceover!

And another that shows what it actually does!

There are a bunch of other features as well such as geofences, notifications, filtering, etc. but the main focus is this large-scale ingestion and storage of data.

At this point, our original Tracking team has grown to include HERE Positioning (the clever people who actually figure out where the devices are) and HERE Venues (we recently acquired Micello). By combining, the Tracking, Positioning and Venues bits together, we can follow one of these devices from one factory, across the country on a truck or train, overseas, into another country, into another factory, out into a shop... and so on.

-

HTTP Status Codes: The Album

-

The Same Side of Two Different Coins

-

Real life is busy

For reasons that this margin is too narrow to contain, I don't have the time to maintain a lot of my open source projects now.

I don't like to abandon them, however. I should have probably done this a few months ago but better late than never.

I'm looking for someone to take over

Hardyandcsste.st.Hardy

https://github.com/thingsinjars/Hardy

Automated CSS testing framework. Back when I used to do CSS for a living, I got interested in the concept of visual regression testing. Not finding the right tool for what I had in mind, I built Hardy. It was quite popular for a while but has been quite neglected for about a year for various reasons. The main reason being that I don't actually write CSS any more. Well, not for a living. The tool worked for me while I needed it and when I didn't, I stopped updating it.

If anybody would like to take over the project and keep it going, get in touch.

csste.st

https://github.com/thingsinjars/csstest

This was supposed to be a community-driven collection of information about CSS testing - tools, techniques, tutorials, etc. I'd love it if it could continue like that but I had a difficult time keeping up my enthusiasm after the umpteenth demand from some "CSS testing as a service" start-up founder demanding a favourable write-up. Quite a few of these founders also wanted some kind of guarantee that I would continue to maintain Hardy for free so they could build a business model around selling it.

Again, if anyone has ideas for how to take the project forward, let me know.

I'm going to renew the domains hardy.io and csste.st for another year to see if I can find maintainers but after that, I'll retire them if I haven't found any.

-

PrologCSS

Seeing as both Prolog and CSS are declarative languages, I found myself wondering if it would be possible to create a mapping from one to the other. It was an interesting thought experiment that quickly found itself being turned into code.

Way back when, Prolog was actually one of the first languages I learned to program in. It had been a while since I'd last used it for anything (a chess endgame solver in high school, I think) so I looked up an online tutorial. The following example is derived from section 1.1. of Learn Prolog Now

Simple rules

If you think of Prolog facts as denoting true/false attributes of elements, you can consider every known item in a KnowledgeBase (KB) as a DOM Element. For example:

mia.Is equivalent to:

<div id="mia"></div>While

woman(mia).Equates to:

<div id="mia" class="woman"></div>You can make multiple statements about an item in the KB:

woman(jody). playsAirGuitar(jody).Which is mapped to:

<div id="jody" class="woman playsAirGuitar"></div>You can then represent these facts using visual attributes:

.woman { background: yellow; } .playsAirGuitar { border: 1px solid black; }The only real issue is that CSS values can’t be aggregated. If they could be, you could always use the same attribute (e.g. box-shadow) and combine them:

.woman { box-shadow: 1px 1px 0 red; } .playsAirGuitar { box-shadow: 2px 2px 0 red; }You'd want this to render two box-shadows, one with a 1px offset and one with a 2px offset.

Instead, you have to use a unique CSS attribute for each class of facts. However, for the simplest examples, It’s not too complicated...

If you want to query the KnowledgeBase, you need to map a standard Prolog query such as:

?- woman(mia).Into the a different output mechanism: HTML.

The response is visualised in HTML and CSS using the following rules:

- There is an element with id "mia"

- There is a class "woman"

- The element with ID "mia" has the class "woman"

In the demo below, you can read this by verifying that the #mia div has a yellow background. Done.

Here's the complete KnowledgeBase for the first section of "Learn Prolog Now".

<!-- woman(mia). --> <div id="mia" class="woman"></div> <!-- woman(jody). --> <!-- playsAirGuitar(jody). --> <div id="jody" class="woman playsAirGuitar"></div> <!-- woman(yolanda). --> <div id="yolanda" class="woman"></div> <div id="party"></div>And here are the queries that could be answered by looking at the visual output: